Mixed reality “command table” research space

Our Command Space facility was originally designed in collaboration with BAE Systems to support Advanced Mixed Reality Command & Control research. Since that early work, the “Command Table” research has been extended to investigate novel Mixed Reality interfaces for projects as diverse as cardiac anatomy, underwater mine detection and countermeasures, and a “remote science station” concept designed to allow schoolchildren and members of the public to engage with the 2020 transatlantic sailing of the Mayflower Autonomous Ship.

As well as a range of head-mounted displays and interactive hand controllers, this facility includes two Motion Capture (MOCAP) rigs that support a wide range of research projects. These include tracking the body, head and arm positions of VR/AR users, and generating realistic movements for the animation of virtual humans (“avatars”) used in defence and heritage projects.

The first rig is a twelve-camera OptiTrack Flex 13 system (six mounts, each hosting two cameras). Each camera has a resolution of 1.3 megapixels, a 56° field of view and a sample rate of 120 frames per second. Using wearable, custom-made reflective markers, the Flex 13 system can provide accurate MOCAP records of multiple actors within a medium-sized room. For VR/AR studies, the same markers can be mounted onto the faceplate of a VR/AR headset or onto gloves.

For smaller-volume, short-range applications, the second rig consists of an OptiTrack V120 Trio sensor. This stand-alone optical tracking system comprises three small digital cameras and is capable of “plug-and-play” position and orientation capture in all six degrees of freedom, again using custom-made markers mounted onto the faceplate of a VR/AR headset or onto gloves. The main computer servicing this facility is a Dell Alienware Area 51 tower desk-side PC.

Reconfigurable enclosure lab for mixed reality training research

Our Mixed Reality Lab is home to a reconfigurable inflatable enclosure, designed to support research into the development of rapidly deployable Mixed Reality training facilities for the defence sector, including, but not restricted to, defence paramedic or MERT (Medical Emergency Response Team) training for the Royal Centre for Defence Medicine.

The enclosure was originally modelled on the internal dimensions of a section of an RAF Chinook helicopter. However, by incorporating various real-world objects and integrating them with VR and AR scenarios, it is possible to develop a wide range of Mixed Reality experiences for training research and development, and for testing new interactive technologies.

The inflatable enclosure consists of an adjustable frame which, together with a range of rigid fixing points built into the structure, can be used to attach various items of equipment, from VR headset trackers to small surveillance cameras. The enclosure can be inflated or deflated in around 20 minutes using a single hand-held air blower. The “instructor’s station” (which also supports development activities) consists of a Dell Alienware Area 51 tower desk-side PC and a Samsung 49-inch CHG90 curved, ultra-wide display with a 178-degree viewing angle. From this station, the instructor can view both the actual and virtual activities taking place within the enclosure and “trigger” other events, such as changes to the data displayed on a virtual life-signs monitor.

Next generation cockpit test bed

We originally designed the Next-Gen Cockpit Test Bed to support research into Mixed Reality technologies and their potential for designing concept human-system interfaces for pilots and mission specialists on board future combat aircraft (a BAE Systems-EPSRC Industrial CASE PhD Studentship).

The test bed is based on a simple cockpit environment (with an actual RAF Tornado aircraft canopy!). The aim is to place participants into an environment where their postures can be constrained during experiments. However, despite its overtly cockpit-like appearance, when combined with VR and AR technologies the test bed can be used for all manner of simulation-based research projects, from future railway locomotive cabs to F1 racing cars, and from special military platforms to automobiles.

The baseline control configuration is a typical HOTAS (Hands On Throttle-and-Stick) arrangement, a common feature of present-day combat aircraft in which a range of buttons and switches are located on the throttle and on the control column or flight stick, allowing users to interact with cockpit functions without removing their hands. However, other interaction technologies can also be integrated, ranging from gesture and touchscreen controls to eye-tracking and even brainwave monitoring devices. The test bed also supports most present-day commercial off-the-shelf VR and AR head-mounted displays, and is powered by a Dell Alienware Area 51 tower desk-side PC.

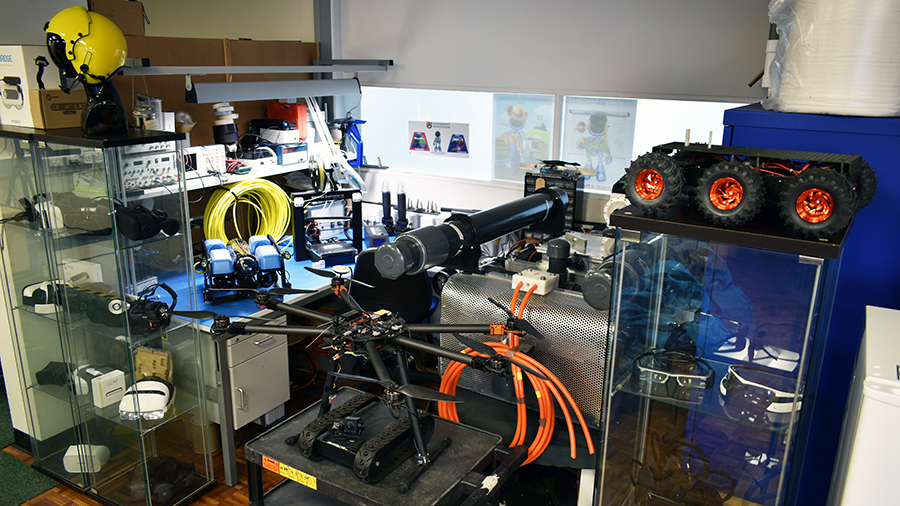

Wearable Technologies, Unmanned Systems and Special Projects Engineering Space

A compact facility exists for the assembly, test and maintenance of our air, land, surface and subsea telerobots. We also use the space for the integration, repair and maintenance of VR and AR equipment, and we 3D print various objects, including sensor attachment modules for wearable technologies, novel concepts for interactive hand controllers and even recreations of fossils.

The HIT Team 'tech corner'