Summary

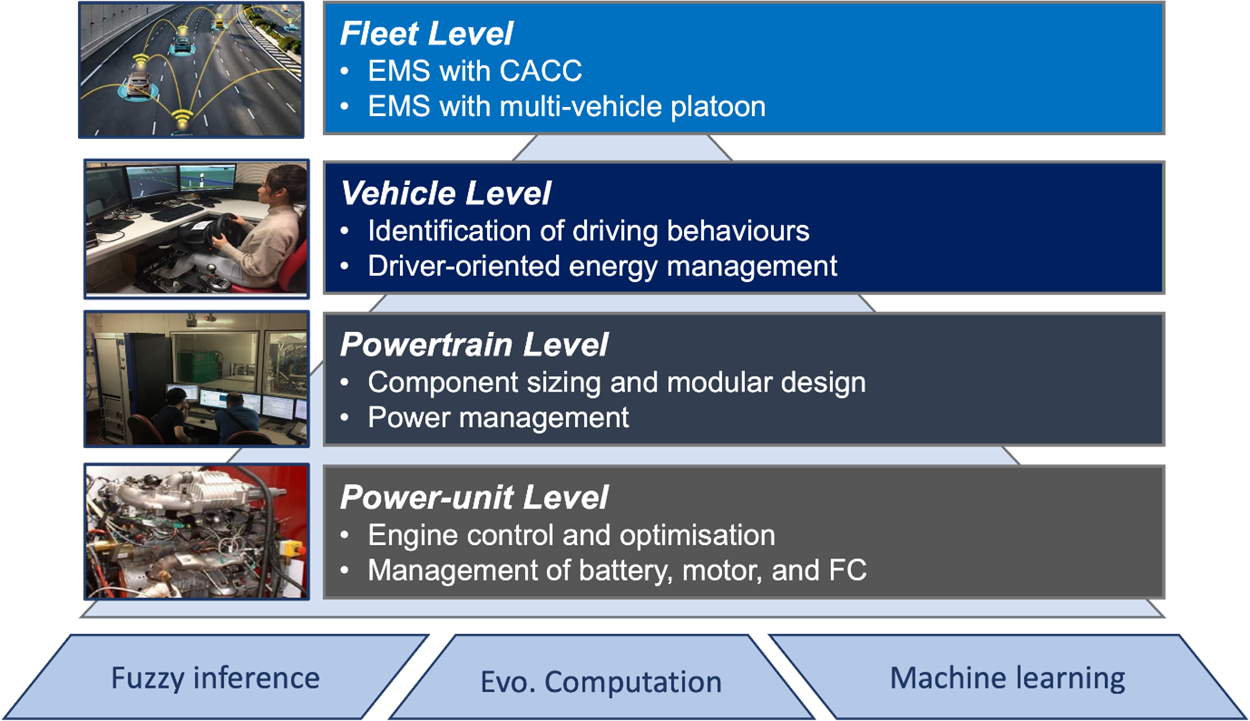

Dr Zhou aspires to harness the emerging power of AI to reshape vehicle design and control, helping to build a more sustainable society. His research interests include fuzzy inference, evolutionary computation, deep learning and reinforcement learning, and their applications in automotive engineering. With more than 70 research papers published in international journals (e.g., IEEE Transactions on Neural Networks and Learning Systems, IEEE Transactions on Industrial Informatics) and conference proceedings, as well as 9 patented inventions, Dr Zhou has gained recognition from both industry and academia. He collaborates closely with several world-leading research institutes, e.g., the EU Joint Research Centre, Nanyang Technological University, Tsinghua University, and RWTH Aachen University.

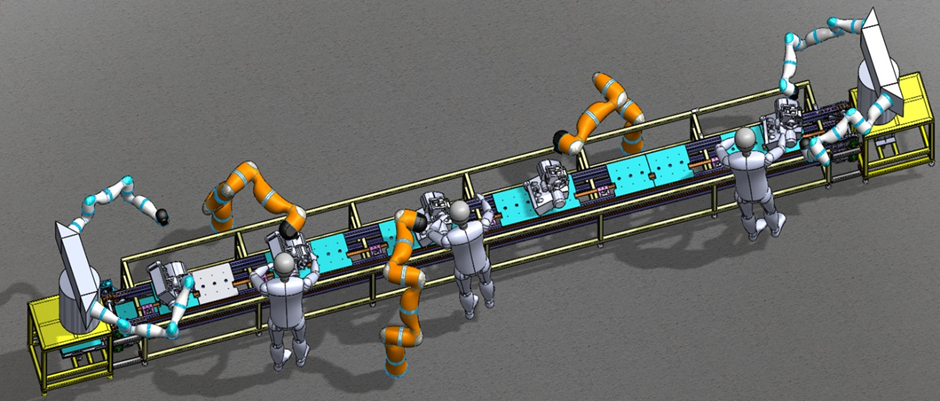

Future vehicles will be connected, automated, shared and electrified (CASE) through the emerging Internet of Vehicles. My research aims to make a timely contribution to zero-emission transport through advanced modelling and control of large-scale electric vehicle platoons. The research is conducted on state-of-the-art X-in-the-loop testing facilities (e.g., AVL Testbed.Connect, AVL PUMA, ETAS LABCAR, IPG-CarMaker), and its outcomes will include an open-source 3D driving environment and onboard control software for real-time multi-objective optimal control (energy economy and durability).

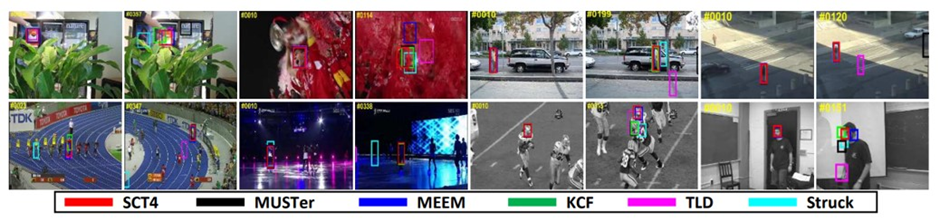

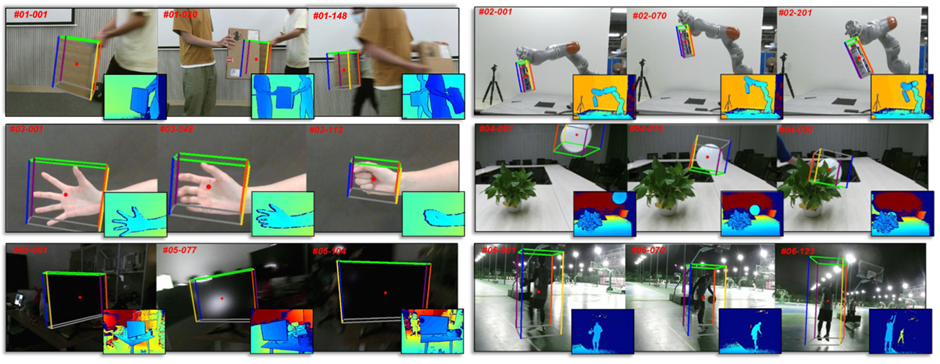

At the core of this research is the development of a multi-agent reinforcement learning algorithm capable of environment perception and decision-making along two main dimensions: a deep inspection of vehicle powertrain systems (e.g., the battery system or fuel cell system), and a global interaction with the traffic infrastructure and surrounding vehicles. We will examine the robustness, reliability, and feasibility of the developed models and algorithms with industry partners.