The Mapping and Enabling Future Airspace (MEFA) research program aims to assess 3D staring radar for detecting and tracking individual birds, individual birds within small groups, and trajectory behaviours in large flocks. The work is carried out in collaboration with colleagues at the Schools of Geography, Earth and Environmental Science, as well as the School of Biosciences at the University.

To understand the radar signatures of birds, and to begin building a dataset of control bird targets for classification, we conducted an experiment in which birds of prey were flown by professional handlers from the International Centre for Birds of Prey (ICBP). Four birds with differing flight behaviours were flown, each fitted with a GPS tag to provide accurate location data that could be correlated with the tracker output of a staring L-band radar.
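As an illustration of how GPS tag data might be correlated with radar tracker output, the sketch below interpolates the GPS record to each radar timestamp and reports the mean horizontal offset between the two. It is a minimal example rather than the project's actual processing chain: the function names, the `(time_s, east_m, north_m)` tuple layout, and the assumption that both data sources share a clock and a local east/north coordinate frame in metres are all hypothetical.

```python
from bisect import bisect_left
from math import hypot


def interpolate_gps(gps_fixes, t):
    """Linearly interpolate a GPS position at time t.

    gps_fixes: list of (time_s, east_m, north_m), sorted by time (assumed layout).
    Returns (east_m, north_m), or None if t lies outside the GPS record.
    """
    times = [fix[0] for fix in gps_fixes]
    if not times or t < times[0] or t > times[-1]:
        return None
    i = bisect_left(times, t)
    if times[i] == t:
        return gps_fixes[i][1], gps_fixes[i][2]
    t0, e0, n0 = gps_fixes[i - 1]
    t1, e1, n1 = gps_fixes[i]
    w = (t - t0) / (t1 - t0)  # fraction of the way from fix i-1 to fix i
    return e0 + w * (e1 - e0), n0 + w * (n1 - n0)


def mean_offset(gps_fixes, radar_track):
    """Mean horizontal distance (m) between radar track points and the GPS path.

    radar_track: list of (time_s, east_m, north_m) in the same frame as the GPS.
    Radar points outside the GPS time span are ignored.
    Returns None if no radar point overlaps the GPS record.
    """
    offsets = []
    for t, east, north in radar_track:
        pos = interpolate_gps(gps_fixes, t)
        if pos is not None:
            offsets.append(hypot(east - pos[0], north - pos[1]))
    return sum(offsets) / len(offsets) if offsets else None
```

A small mean offset over a sustained period suggests that a given radar track corresponds to the tagged bird; in practice the tolerance would depend on the GPS fix rate and the radar's positional accuracy.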

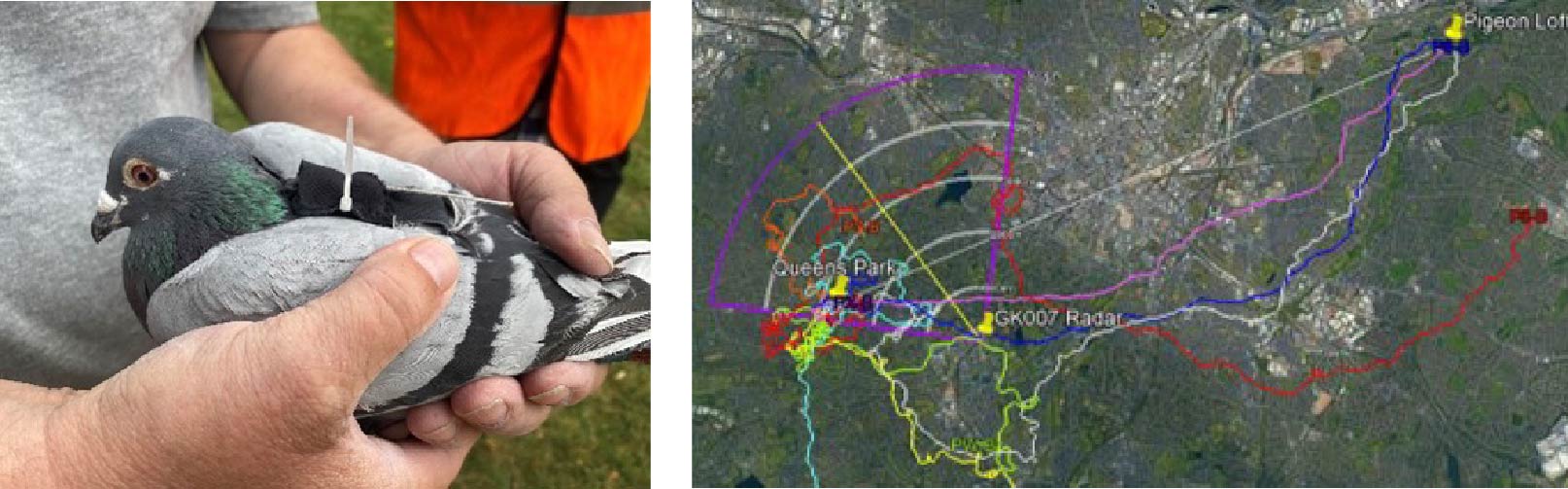

In addition to the birds of prey flights, a similar trial was conducted in which racing pigeons equipped with GPS tags were flown from a release location within the radar's field of view back to their loft. These pigeons were detected and tracked by the radar, and the resulting spectrograms can be used to further develop classification algorithms.

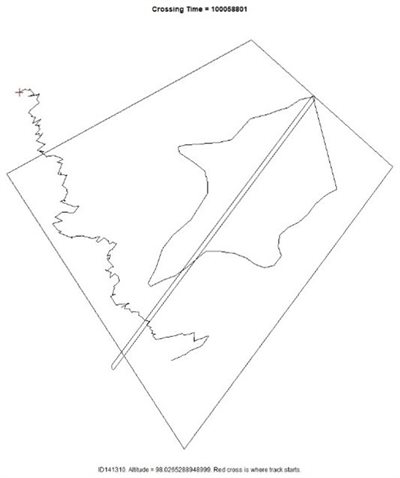

As well as measuring control bird targets, for which the identity and GPS location are known, the MEFA project has collected an enormous catalogue of detection and tracking data for opportune birds, gathered every day the radar is operational throughout the year. To label some of this data, we developed a line transect method in which an observer records the time and position at which an identified bird crosses a virtual line. Using this method together with the tracks produced by the radar over the seasons, ecologists will be able to estimate the biomass of birds in the radar's field of view.
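One way such line transect observations could be matched to radar tracks is sketched below: find where each track crosses the virtual line, then assign the observer's identification to the crossing closest in time, within a time and position tolerance. This is a hypothetical illustration, not the method used in the project; the function names, the data layouts, and the tolerance values `max_dt` and `max_dist` are assumptions.

```python
from math import hypot


def side(a, b, p):
    """Signed area test: positive if p is left of the line a->b, negative if right."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def line_crossings(track, a, b):
    """Find where a track crosses the transect segment a-b.

    track: list of (time_s, x_m, y_m); a, b: (x_m, y_m) end points of the line.
    Returns a list of (time_s, distance_along_line_m), interpolated linearly.
    """
    length = hypot(b[0] - a[0], b[1] - a[1])
    crossings = []
    for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:]):
        s0, s1 = side(a, b, (x0, y0)), side(a, b, (x1, y1))
        if s0 * s1 >= 0:
            continue  # both points on the same side (or touching the line)
        w = s0 / (s0 - s1)  # fraction along the step where the sign flips
        x, y = x0 + w * (x1 - x0), y0 + w * (y1 - y0)
        along = ((x - a[0]) * (b[0] - a[0]) + (y - a[1]) * (b[1] - a[1])) / length
        if 0 <= along <= length:
            crossings.append((t0 + w * (t1 - t0), along))
    return crossings


def label_tracks(observations, tracks, a, b, max_dt=5.0, max_dist=50.0):
    """Assign observer identifications to radar tracks.

    observations: list of (time_s, distance_along_line_m, species).
    tracks: dict mapping track_id -> track (see line_crossings).
    max_dt, max_dist: illustrative tolerances in seconds and metres.
    Returns dict mapping track_id -> species.
    """
    labels = {}
    for obs_t, obs_along, species in observations:
        best = None
        for track_id, track in tracks.items():
            for t, along in line_crossings(track, a, b):
                dt = abs(t - obs_t)
                if dt <= max_dt and abs(along - obs_along) <= max_dist:
                    if best is None or dt < best[0]:
                        best = (dt, track_id)
        if best is not None:
            labels[best[1]] = species
    return labels
```

Observations with no radar crossing inside the tolerances are left unmatched, which keeps ambiguous cases out of the labelled set.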

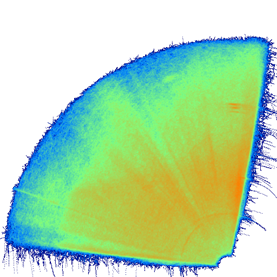

The trajectory characteristics of the known bird targets can also feed into the classification of different bird species observed by the radar. To characterise the many tracks produced by the radar over long time periods, we developed a tool to generate heatmaps of activity. These heatmaps can be filtered by time as well as by track characteristics such as height and velocity. This allows us to build up a picture of urban bird activity and to study how that activity changes over time.
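The idea behind a filtered activity heatmap can be sketched as follows: track points are first filtered by time, height and speed, then counted in square grid cells. This is a minimal illustration and not the project's tool; the function name, the dictionary keys (`t`, `x`, `y`, `height`, `speed`) and the default cell size are assumptions.

```python
from collections import Counter


def activity_heatmap(points, cell_m=100.0, t_range=None,
                     height_range=None, speed_range=None):
    """Count track points per grid cell, with optional filters.

    points: iterable of dicts with keys t (s), x (m), y (m),
            height (m) and speed (m/s); this layout is assumed.
    cell_m: side length of a square grid cell in metres.
    *_range: optional (min, max) bounds, inclusive; None disables the filter.
    Returns a Counter mapping (column, row) -> number of track points.
    """
    def in_range(value, bounds):
        return bounds is None or bounds[0] <= value <= bounds[1]

    grid = Counter()
    for p in points:
        if (in_range(p["t"], t_range)
                and in_range(p["height"], height_range)
                and in_range(p["speed"], speed_range)):
            cell = (int(p["x"] // cell_m), int(p["y"] // cell_m))
            grid[cell] += 1
    return grid
```

Calling the function once per time window, for example once per hour or per season, gives a sequence of grids that can be compared to see how activity shifts over time.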